Ji Lin

Contact:

jilin.eecs AT gmail

I am a research scientist at OpenAI, working on multimodal, reasoning, and synthetic data. I contributed to o3/o4-mini, GPT-4o, GPT-4.1, GPT-4.5, Operator, 4o imagegen, etc.

💡 Read our latest blog post on visual reasoning: Thinking with images!

Previously, I completed my PhD at MIT EECS, advised by Prof. Song Han. Before that, I received my B.Eng. in Electronic Engineering from Tsinghua University and my M.Sc. in EECS from MIT. I've interned/worked at Adobe Research, OmniML, and NVIDIA Research.

Publications [Full List]

* indicates equal contribution

|

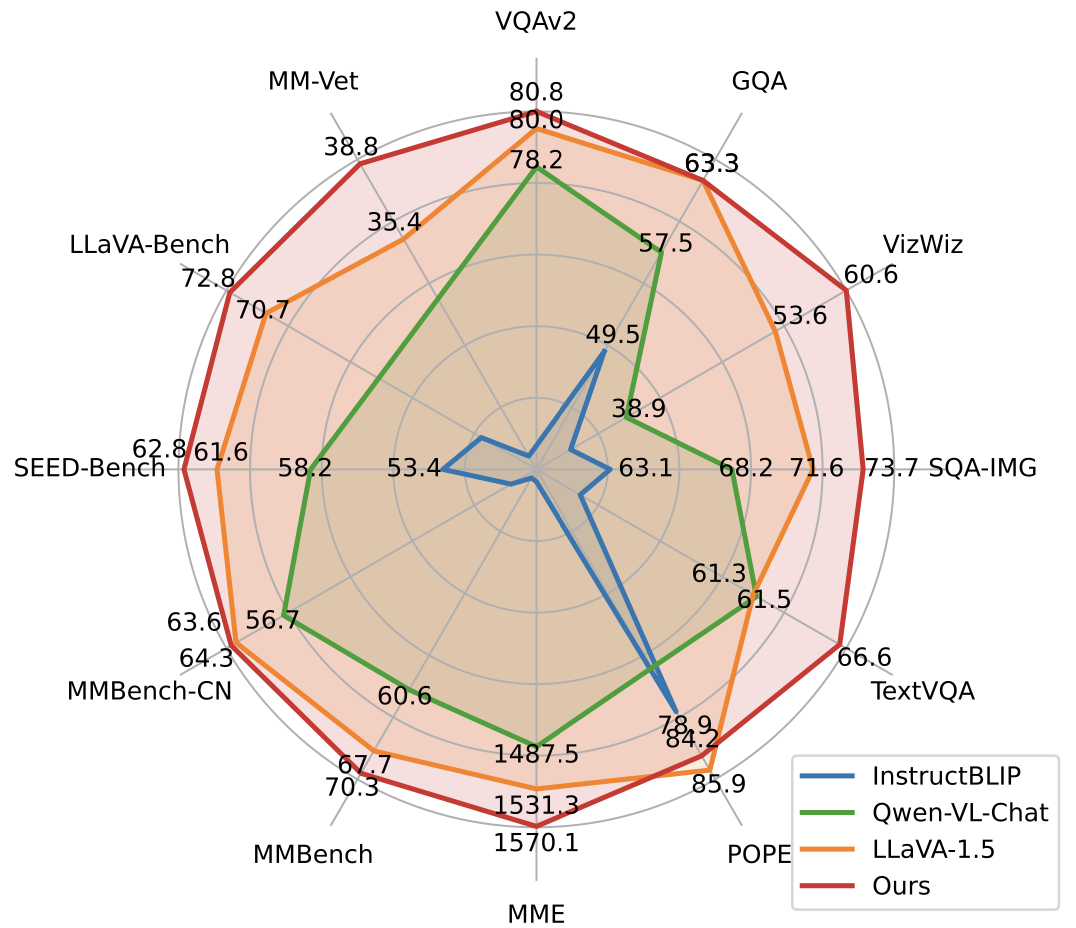

VILA: On Pre-training for Visual Language Models

|

|

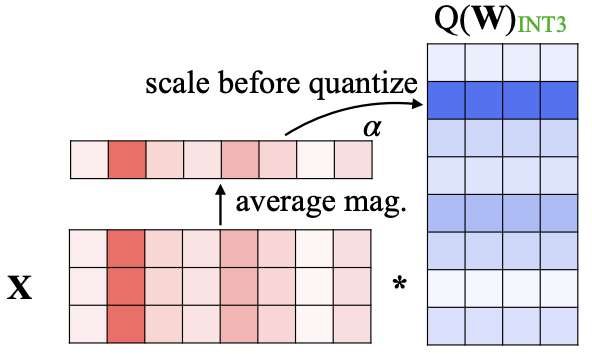

AWQ: Activation-aware Weight Quantization for LLM Compression and Acceleration

Integration: NVIDIA TRT-LLM / Intel Neural Compressor / vLLM / FastChat / HuggingFace TGI / LMDeploy / FriendliAI

Best Paper Award

|

|

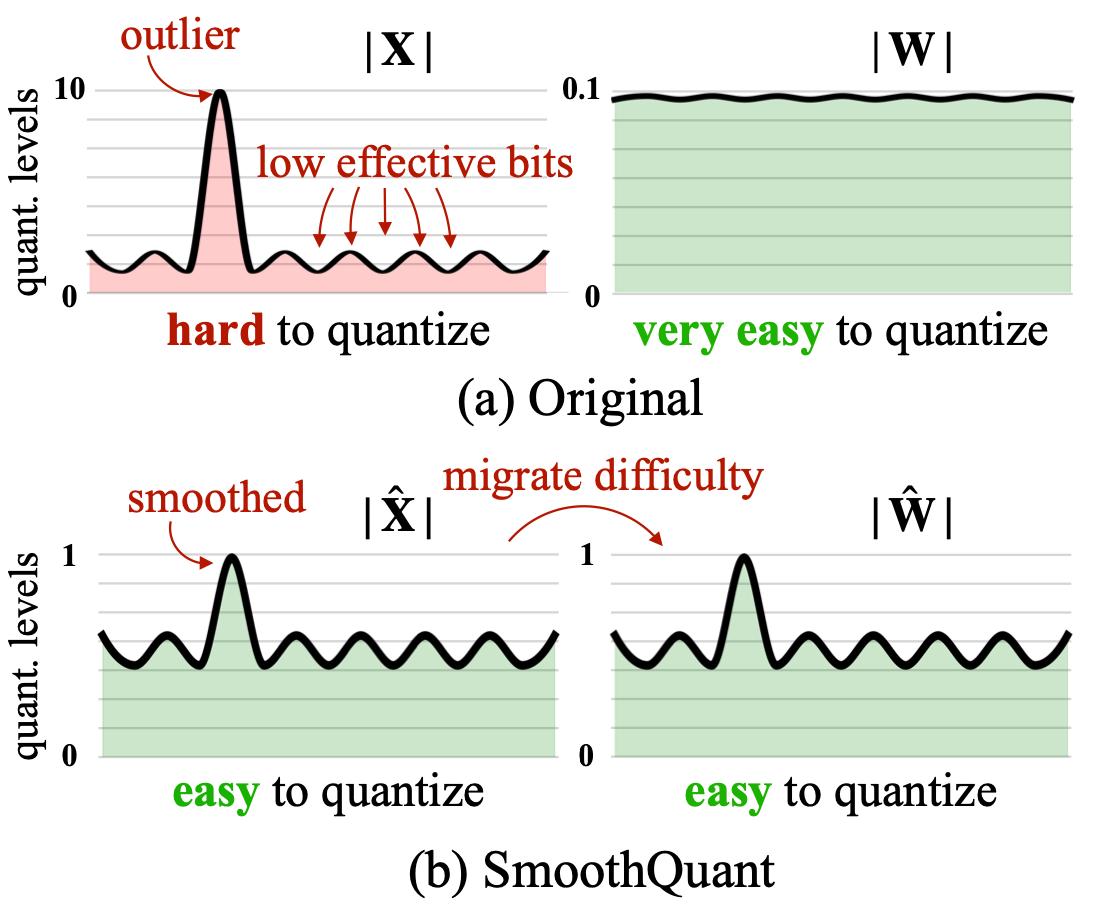

SmoothQuant: Accurate and Efficient Post-Training Quantization for Large Language Models

|

|

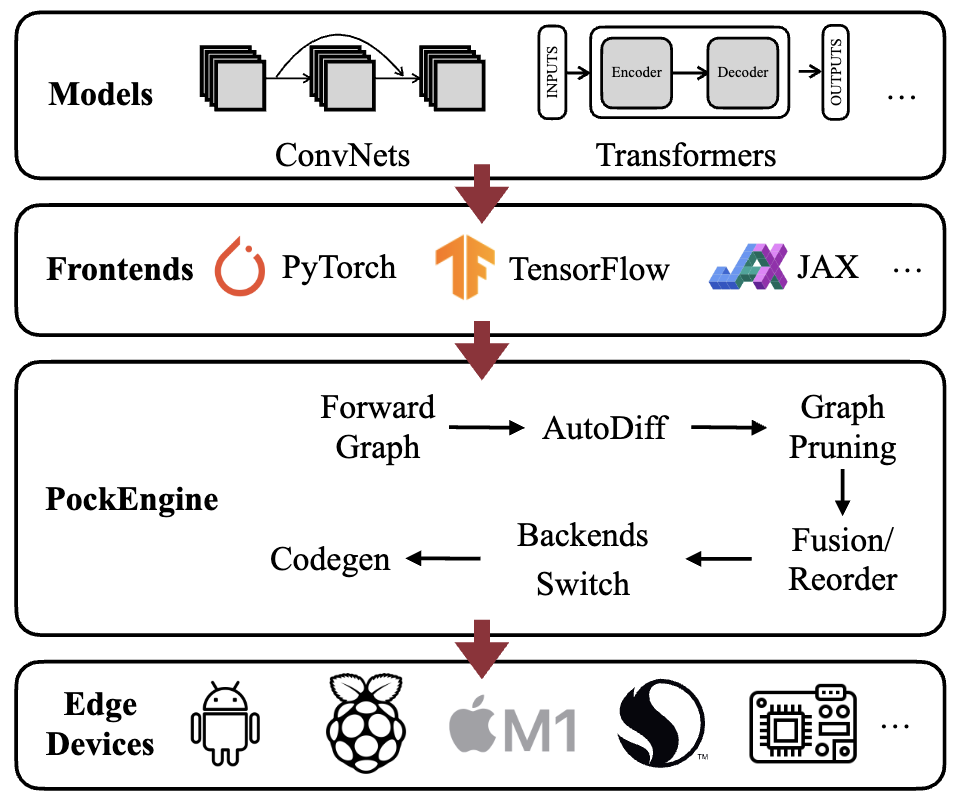

PockEngine: Sparse and Efficient Fine-tuning in a Pocket

|

|

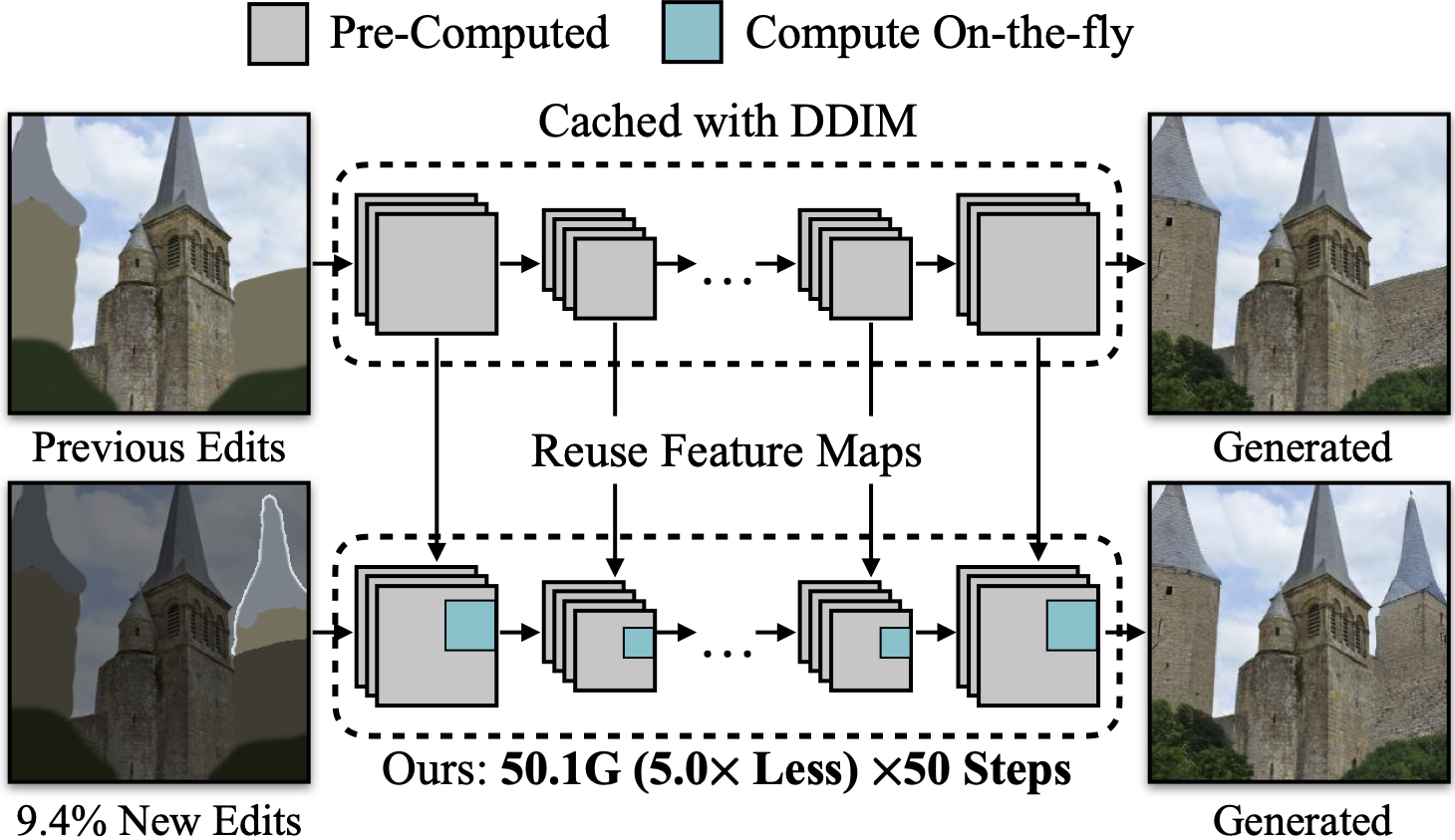

Efficient Spatially Sparse Inference for Conditional GANs and Diffusion Models

|

|

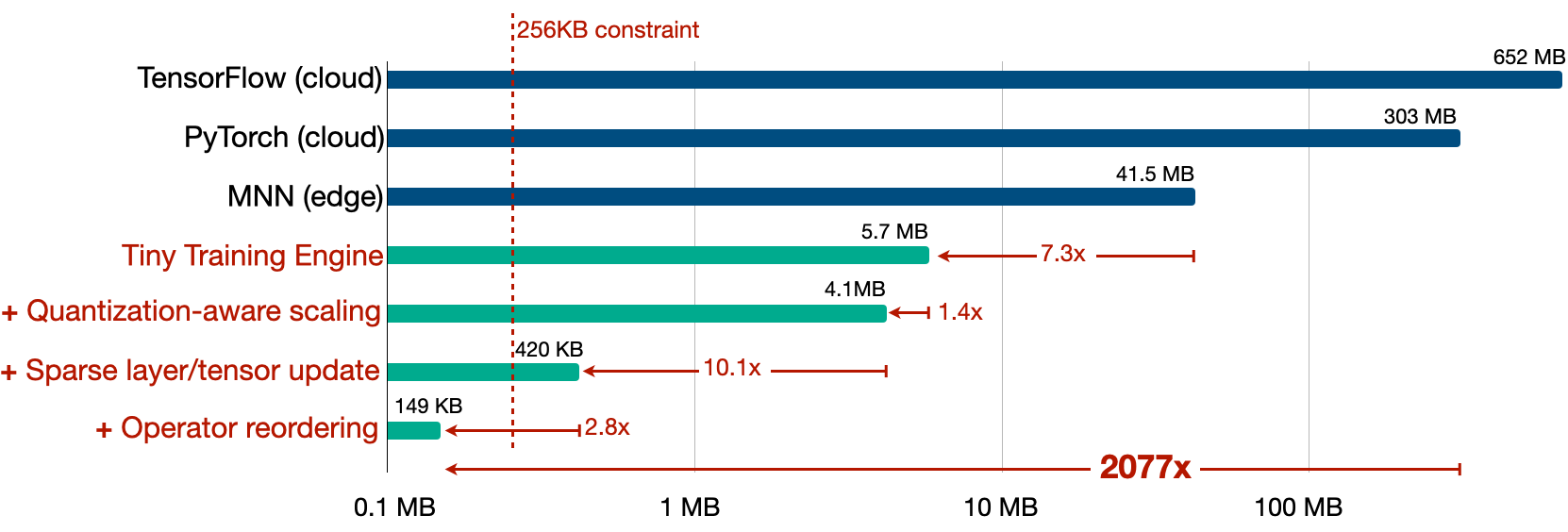

On-Device Training Under 256KB Memory

Press: MIT News (homepage spotlight)

|

|

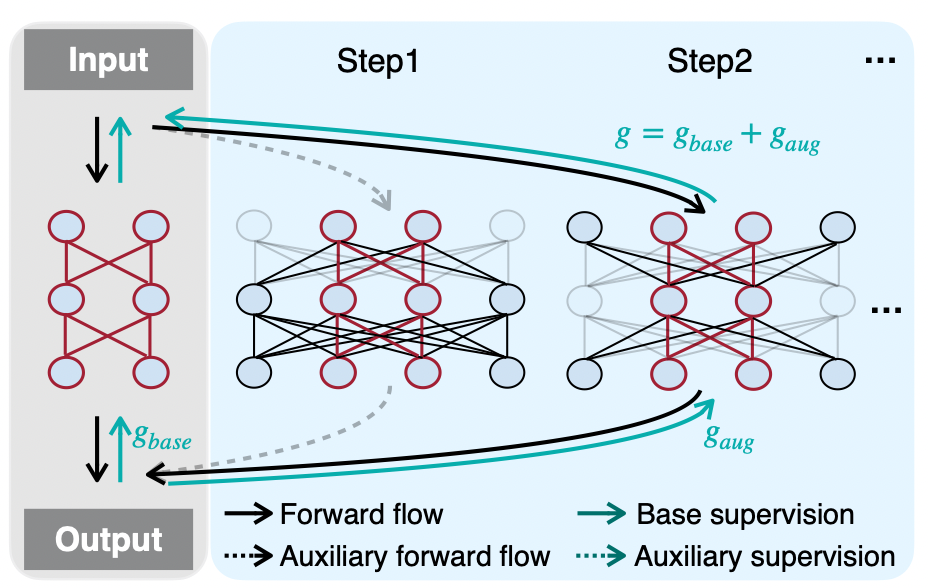

Network Augmentation for Tiny Deep Learning

|

|

MCUNetV2: Memory-Efficient Patch-based Inference for Tiny Deep Learning

|

|

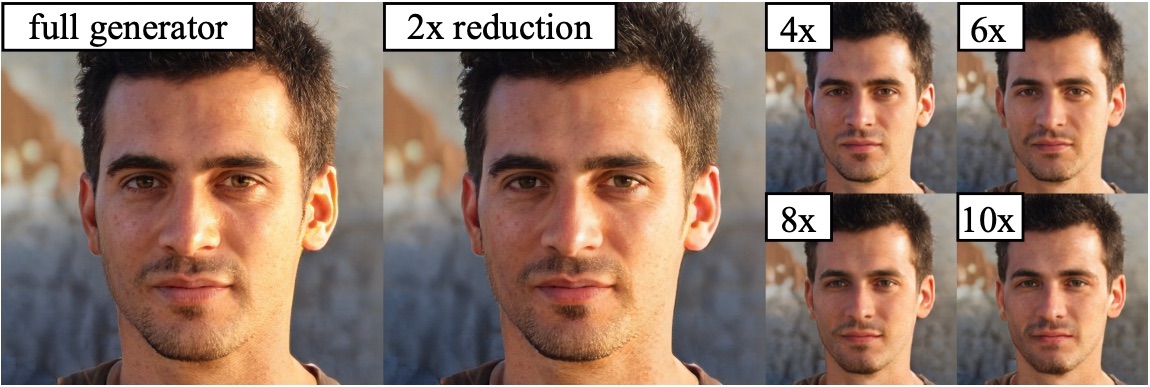

Anycost GANs for Interactive Image Synthesis and Editing

|

|

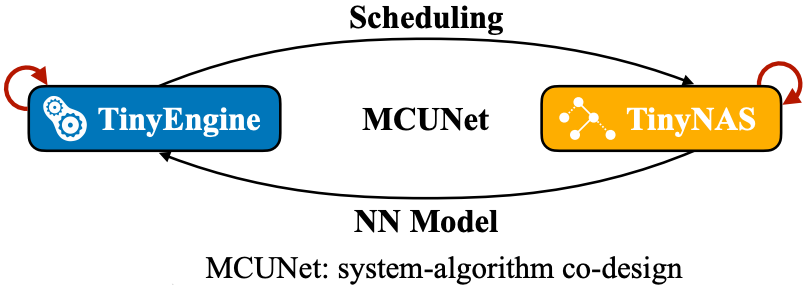

MCUNet: Tiny Deep Learning on IoT Devices

Press: MIT News (homepage spotlight) / WIRED / MIT TR-China / IBM / Morning Brew / Stacey on IoT / Analytics Insight / Techable / Tendencias

|

|

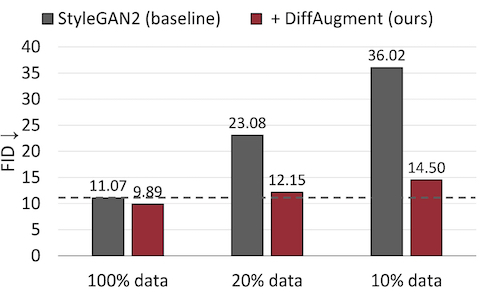

Differentiable Augmentation for Data-Efficient GAN Training

Press: VentureBeat

|

|

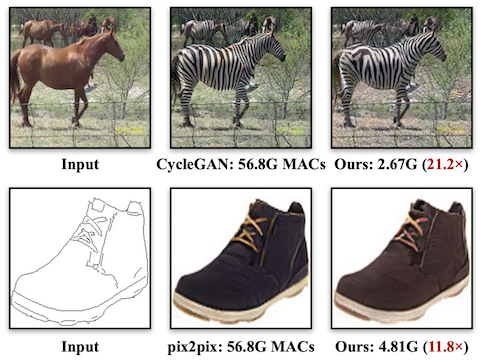

GAN Compression: Efficient Architectures for Interactive Conditional GANs

|

|

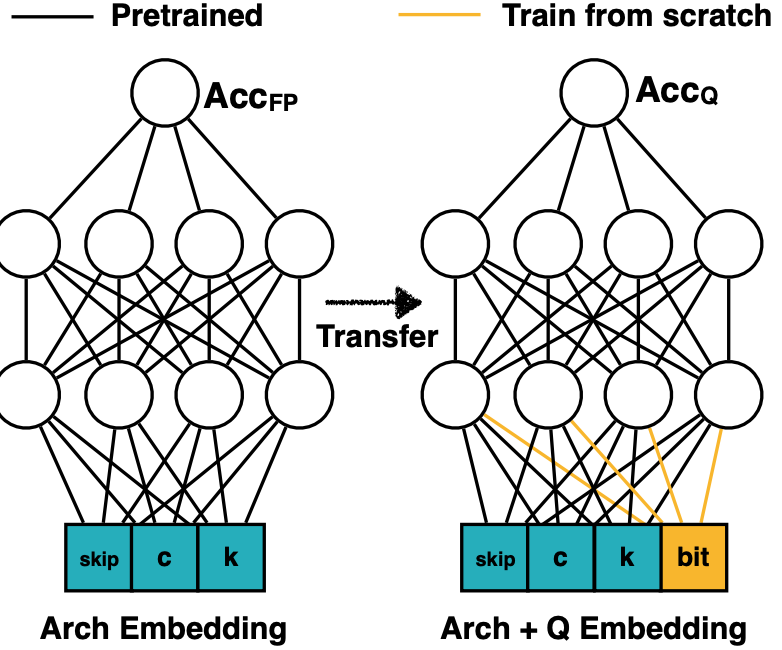

APQ: Joint Search for Network Architecture, Pruning and Quantization Policy

|

|

AutoML for Architecting Efficient and Specialized Neural Networks

|

|

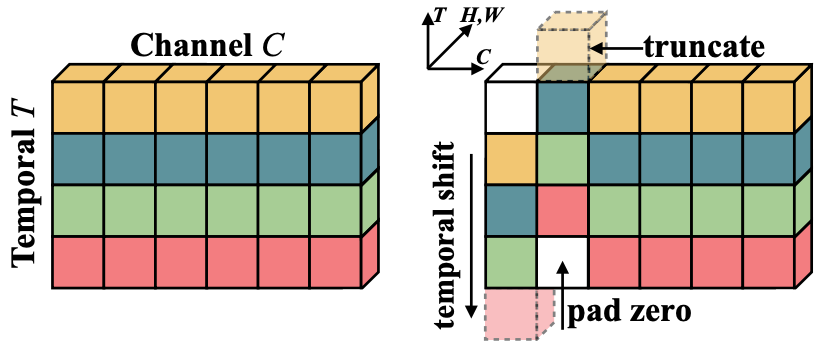

TSM: Temporal Shift Module for Efficient Video Understanding

ICCV 2019 / arXiv

Training Kinetics in 15 Minutes: Large-scale Distributed Training on Videos

Press: MIT News / MIT Technology Review / WIRED / Engadget / NVIDIA News / Industry Integration@NVIDIA

|

|

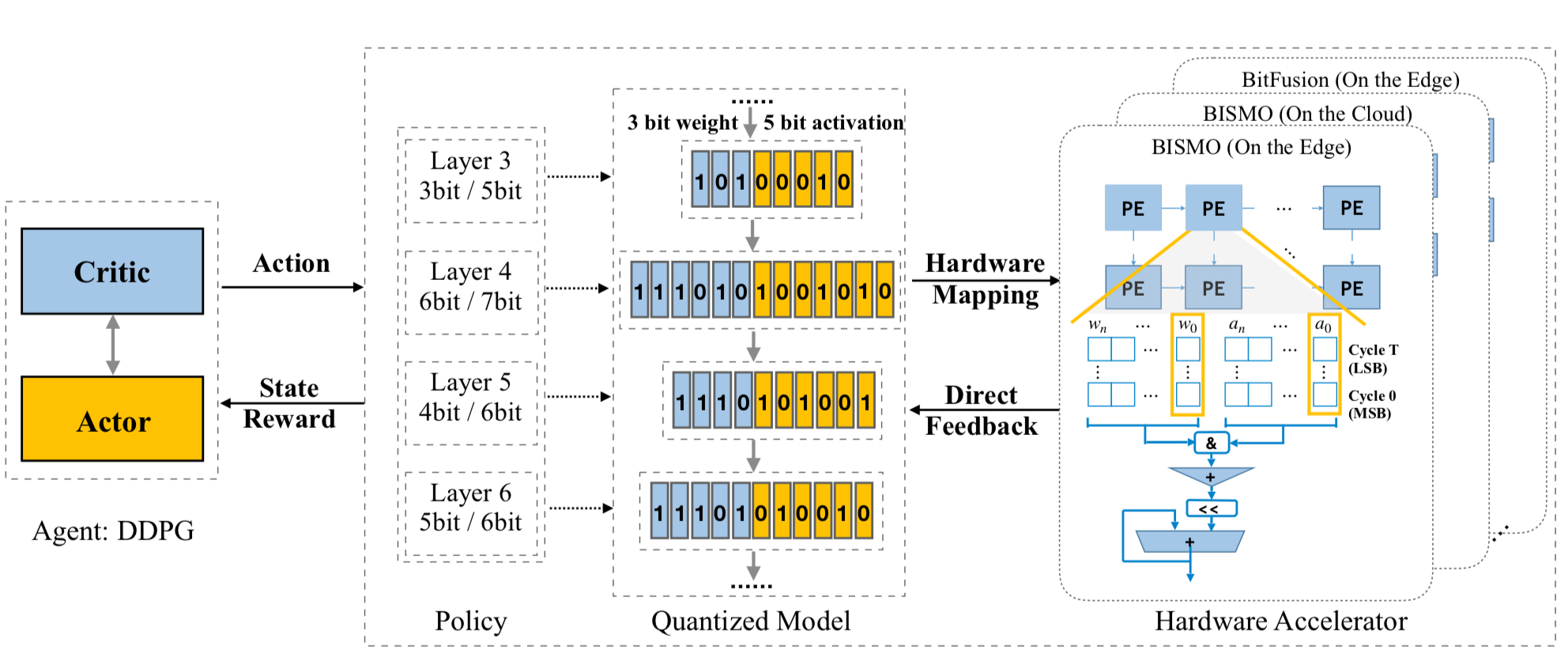

HAQ: Hardware-Aware Automated Quantization

Hardware-Centric AutoML for Mixed-Precision Quantization

|

|

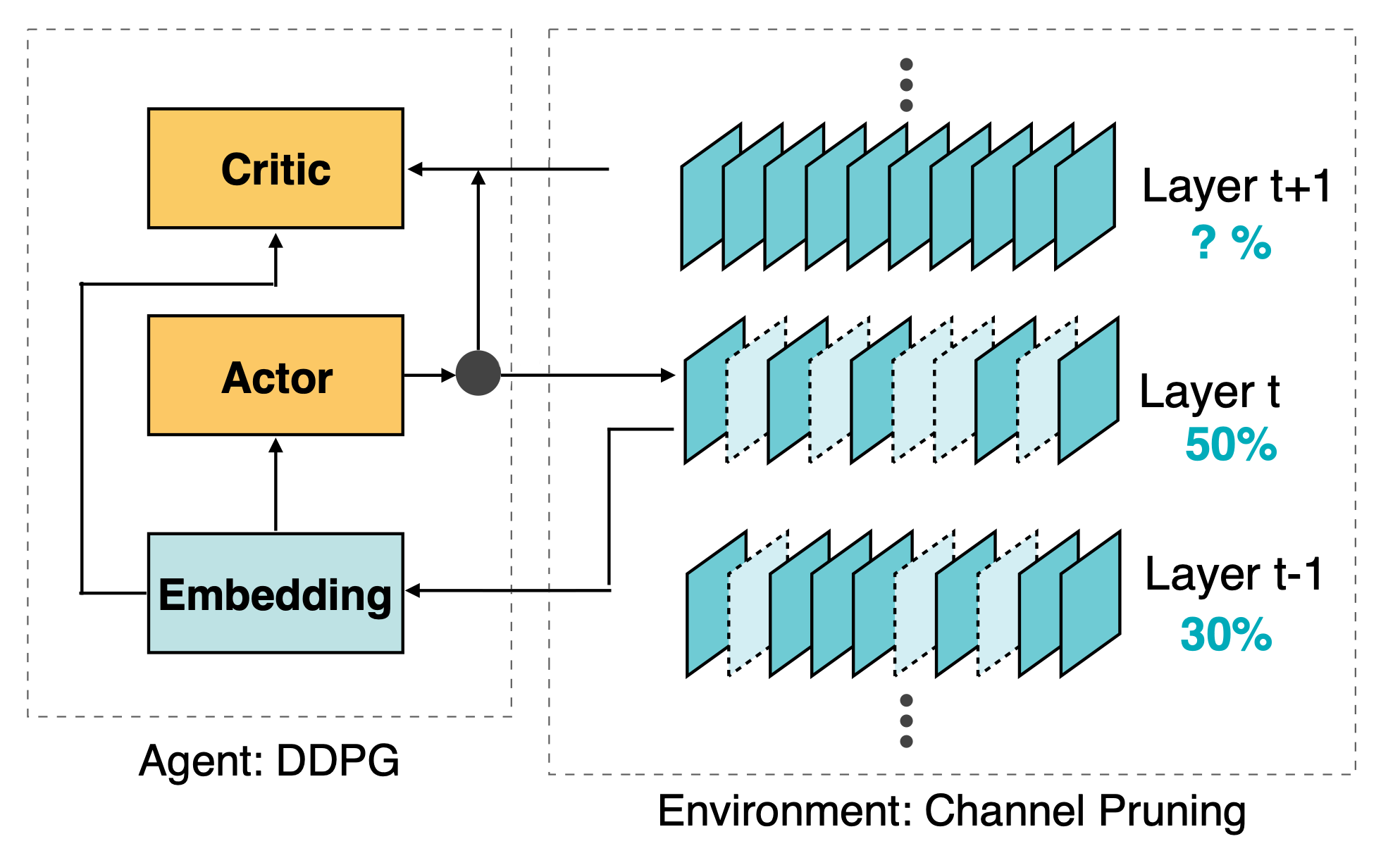

AMC: AutoML for Model Compression and Acceleration on Mobile Devices

|

|

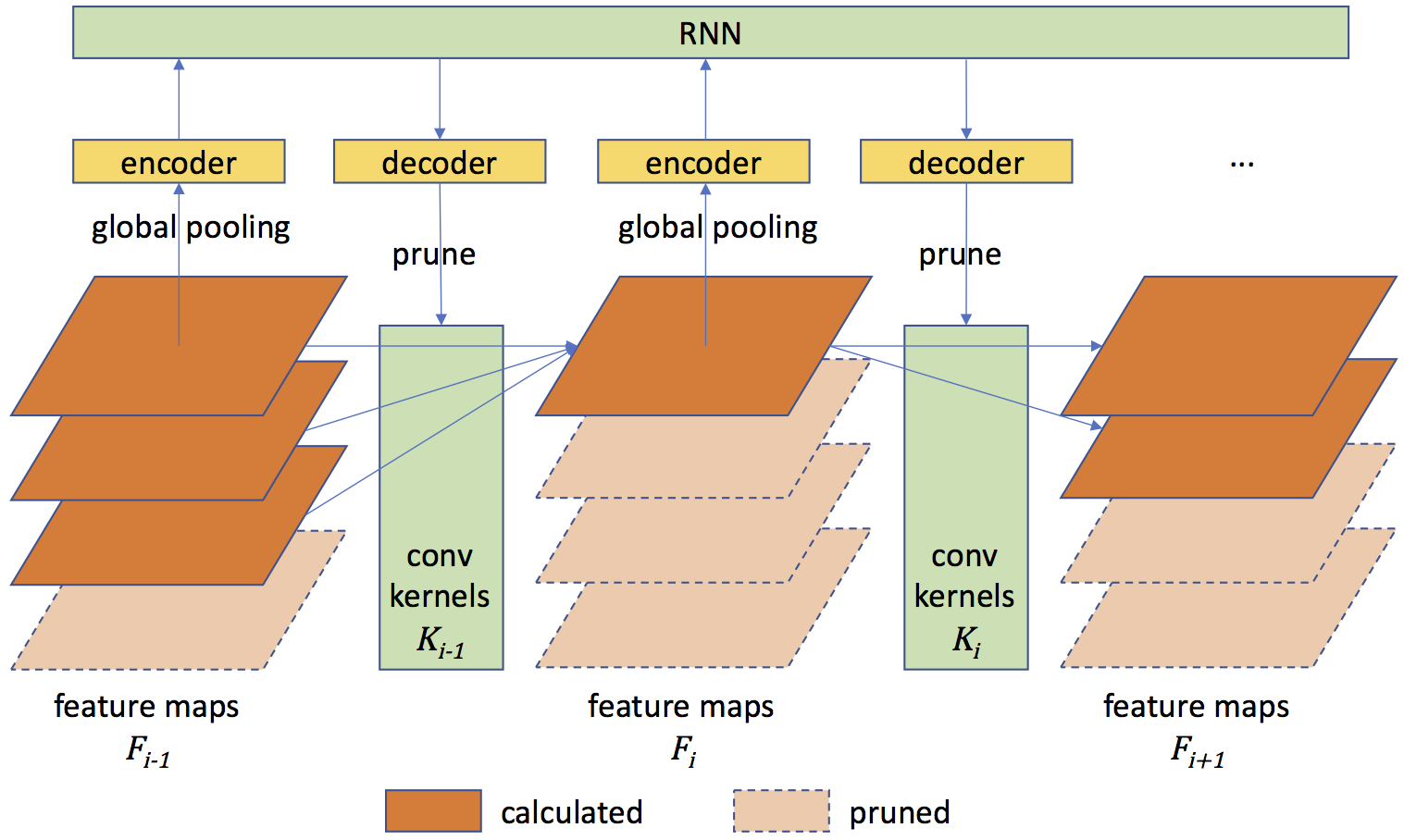

Runtime Neural Pruning

Runtime Network Routing for Efficient Image Classification

|

Academic Service

- Conference reviewer: ICLR, ICML, NeurIPS, CVPR, ICCV, ECCV, SIGGRAPH, IJCAI, AAAI, ACMMM, etc.

- Journal reviewer: T-PAMI, JMLR, T-MM, etc.